Welcome!

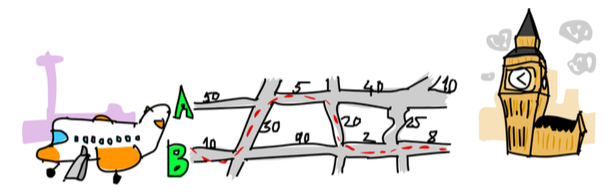

Welcome to flow, a system for defining and running parallel,

dataflow programs like this one:

Page through this book using the '>' and '<' buttons on the side of the page, or navigate directly to a section using the Table of Contents on the left.

The top-level sections are:

- Installing flow

- Introduction to Flow

- Your First Flow

- Defining Flows

- flowr's context functions

- Running flows

- Debugging Flows

- The Flow Standard Library

- Example flows

- Developing Flow

- Internals of the Flow Project

Installing flow on your system

There are three main options for getting a working install of flow on your system:

- from source

- downloading a release

- homebrew tap

From Source

All pretty standard:

- clone this repo

- install pre-requisites with make config

- build and test with make

That will leave binaries (such as flowc, flowrcli, etc.) in target/debug, and flowstdlib installed into $HOME/.flow/lib.

You can use them from there, or you can install them using cargo install.

Downloading the latest release

From the latest GitHub release, download and manually install the executables for your target system:

- flowc

- flowrcli

- flowrex

- flowrgui

Then download the portable WASM flowstdlib and expand it into the directory $HOME/.flow/lib/flowstdlib.

Homebrew tap

A homebrew tap repo is maintained here which you can use to install with homebrew:

> brew tap andrewdavidmackenzie/dataflow

> brew install dataflow

That should install the binaries and the portable flowstdlib WASM library, leaving everything ready for running flows.

What is 'flow'

flow is a system for defining and running inherently parallel, data-dependency-driven 'programs'.

Wikipedia describes dataflow programming this way:

"dataflow programming is a programming paradigm that models a program as a directed graph of the data flowing between operations"

which pretty much sums it up.

A flow program is created by defining a directed graph of processes that process data and that are connected by connections.

A process can have zero or more inputs and produces zero or one output. They have no side-effects.

There is no shared-memory.

In flow a process is a generic term. A process can be a function that directly implements the processing on data, or it can be a nested "sub-flow", i.e. another flow definition, which in turn may contain functions and/or other sub-flows.

When we wish to refer to them indistinctly, we will use the term process. When distinctions need to be made we will use function, flow or sub-flow.

Thus, a flow is an organization object, used to hierarchically organize sub-flows and functions, and functions are what actually get work done on data.

Flows can be nested infinitely, but eventually end in functions. Functions consist of a definition (for the compiler and the human programmer) and an implementation (for the runtime to use to process data).

The connections between processes are explicit declarations of data dependencies between them, i.e. what data is required for a process to be able to run, and what output it produces.

Thus a flow is inherently parallel, without any further need to express the parallelism of the described algorithm.

As part of describing the connections, I would like flow to also be visual, making the data dependencies visible and directly visually "author-able". This is still a work in progress, and a declarative text format for flow definitions was a step on the way and is what is currently used.

Functions and sub-flows are interchangeable and nestable, so that higher level programs can be constructed by combining functions and nested flows, making flows reusable.

I don't consider flow a "programming language", as the functionality of the program is created from the combination of functions, that can be very fine grained and implemented in many programming languages (or even assembly, WebAssembly or something else).

Program logic (control flow, loops) emerges from how the processes are "wired together" in 'flows'.

I have chosen to implement the functions included with flow (in the flowstdlib standard library and the context functions of the flowrcli flow runner) in rust, but they could be in other languages.

I don't consider flow (or the flow description format) a DSL. The file format was chosen simply to describe a flow in text. The file format is not important, provided it can describe the flow (processes and connections).

I chose TOML as there was good library support for parsing it in rust and it's a bit easier on the eyes than writing JSON. I later implemented multiple deserializers, so the flow description can be in other formats (including JSON and YAML), and descriptions in multiple formats can even be mixed and combined.

Q. Is it high-level or low-level?

A. "Well...yes".

The level of granularity chosen for the implementation of functions that flows are built from is arbitrary.

A function could be as simple as adding two numbers, or it could implement a complex algorithm.

Interchangeability of functions and sub-flows as processes

A number of simple primitive functions can be combined together into a flow which appears as a complex process to the user, or it could be a complex function that implements the entire algorithm in code in a single function.

The users of the process should not need to know how it is implemented. They see the process definition of its inputs and outputs, a description of the processing it performs, and use it indistinctly.

Fundamental tenets of 'flow'?

The 'tenets', or fundamental design principles, of flow that I have strived to meet include:

No Global or shared memory

The only data within flow is that flowing on the connections between processes. There is no way to store global state, share variables between functions nor persist data across multiple function invocations.

Pure Functions

Functions have no side-effects (except context functions which I'll describe later). Jobs for functions are created with a set of inputs and they produce an output, and the output should only depend on the input, along the lines of "pure" functions in Functional Programming. Thus a function should be able to be invoked multiple times and always produce the same output. Also, functions can be executed by different threads, processes, machines and machine architectures and always produce the same output.

This helps make flow execution predictable, but also parallelizable. Functions can be run in parallel or interleaved without any dependency on the other functions that may have run before, those running at the same time, or those in the future, beyond their input values.

This can also enable novel tracing and debugging features, such as "time travel" (going backwards in a program) or "un-executing" a function (stepping backwards).
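To make the idea concrete, here is a minimal sketch in rust (the language flow itself is written in). It is not flowstdlib's actual code: `pure_add` and `run_on_threads` are hypothetical names, and returning `None` stands in for "no output". Because the function only depends on its inputs, running the same job on several threads always gives the same result.

```rust
use std::thread;

/// Hypothetical pure function implementation: the output depends only on the
/// inputs, and `None` models "no output" (here, when the addition overflows).
fn pure_add(inputs: &[i64]) -> Option<i64> {
    inputs[0].checked_add(inputs[1])
}

/// Runs the same job (same function, same inputs) on `n` threads and collects
/// the results. Since `pure_add` has no state, every thread gets the same value.
fn run_on_threads(inputs: [i64; 2], n: usize) -> Vec<Option<i64>> {
    let handles: Vec<_> = (0..n)
        .map(|_| thread::spawn(move || pure_add(&inputs)))
        .collect();
    handles.into_iter().map(|h| h.join().unwrap()).collect()
}
```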

Encapsulation

The complexity of a process is hidden inside its definition and you don't need to know its implementation to know how to use it.

- Public specification of a process: inputs and outputs for the compiler and user, and a text description of what processing it performs on its inputs and what output(s) it produces, for the human programmer.

- Private implementation. A process implementation can be a function implemented in code or an entire sub-flow containing many sub-layers and eventually functions.

A process's implementation should be able to be changed, and changed from a function to a sub-flow or vice versa without affecting flow programs that use it.
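A rough way to picture this in rust is a trait playing the role of the public specification, with two interchangeable implementations: one written directly as a single function, and one composed like a sub-flow from smaller functions. The `Process` trait and all the struct names are hypothetical, chosen only for illustration; they are not flow's API.

```rust
/// Hypothetical public specification of a process: how many inputs it takes
/// and how it maps input values to an optional output.
trait Process {
    fn input_count(&self) -> usize;
    fn run(&self, inputs: &[i64]) -> Option<i64>;
}

/// Implementation 1: a single function that does all the work in code.
struct SumOfSquaresFn;

impl Process for SumOfSquaresFn {
    fn input_count(&self) -> usize {
        2
    }
    fn run(&self, inputs: &[i64]) -> Option<i64> {
        let a = inputs[0].checked_mul(inputs[0])?;
        let b = inputs[1].checked_mul(inputs[1])?;
        a.checked_add(b)
    }
}

/// Primitive functions that a "sub-flow" can be built from.
struct SquareFn;
impl Process for SquareFn {
    fn input_count(&self) -> usize {
        1
    }
    fn run(&self, inputs: &[i64]) -> Option<i64> {
        inputs[0].checked_mul(inputs[0])
    }
}

struct AddFn;
impl Process for AddFn {
    fn input_count(&self) -> usize {
        2
    }
    fn run(&self, inputs: &[i64]) -> Option<i64> {
        inputs[0].checked_add(inputs[1])
    }
}

/// Implementation 2: a "sub-flow" where each input goes to a square function
/// and both results are connected to the inputs of an add function.
struct SumOfSquaresFlow {
    square: SquareFn,
    add: AddFn,
}

impl Process for SumOfSquaresFlow {
    fn input_count(&self) -> usize {
        2
    }
    fn run(&self, inputs: &[i64]) -> Option<i64> {
        let a = self.square.run(&inputs[0..1])?;
        let b = self.square.run(&inputs[1..2])?;
        self.add.run(&[a, b])
    }
}

/// A user of the process depends only on the specification (the trait).
fn use_process(p: &dyn Process, inputs: &[i64]) -> Option<i64> {
    assert_eq!(inputs.len(), p.input_count(), "wrong number of inputs");
    p.run(inputs)
}
```

Code that calls `use_process` cannot tell the two implementations apart, which is the property that lets a process be switched from a function to a sub-flow (or back) without affecting its users.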

Re-usability

Enabled by encapsulation. A well defined process can be used in many other flows via references to it. This facilitates the "packing" of processes (be they functions or sub-flows) for re-use by others in other flows.

Portability

The intention is that the run-time can run on many platforms. The libraries have been written to be able to compile to WASM and be portable across machines and machine architectures.

The function implementations in libraries are compiled to native for performance but also to WASM for portability. Function implementations provided by the user as part of a flow are compiled to WASM once, then distributed with the flow and run by any of the run-times, making the flow portable without re-compilation.

Polyglot

Although the compiler and runtimes are written in one language (rust), other versions could be written in other languages. There should be nothing in flow semantics or flow definitions specific to one language.

Process implementations supplied with a flow could be written in any language that can compile to WASM, so it can then be distributed with the flow and then loaded and run by any run-time implementation.

Functional Decomposition

Enable a problem to be decomposed into a number of communicating processes, and those in turn can be decomposed and so on down in a hierarchy of processes until functions are used. Thus the implementation is composed of a number of processes, some of which may be reused from elsewhere and some specific to the problem being solved.

Structured Data

Data that flows between processes can be defined at a high-level, but consist of a complex structure or multiple levels of arrays of data, and processes and sub-processes can select sub-elements as input for their processing.
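As an illustration only, this sketch models a structured value as nested arrays of numbers and lets a consumer select a sub-element by a path of array indexes. The `Value` type and the `select` function are hypothetical and do not reproduce how flow itself represents or selects data.

```rust
/// Hypothetical structured value flowing on a connection.
#[derive(Debug, Clone, PartialEq)]
enum Value {
    Number(i64),
    Array(Vec<Value>),
}

/// Selects a sub-element of `value` by following a path of array indexes,
/// e.g. `[1, 0]` selects element 0 of element 1. Returns `None` if the path
/// does not exist in this value.
fn select<'a>(value: &'a Value, path: &[usize]) -> Option<&'a Value> {
    let mut current = value;
    for &index in path {
        match current {
            Value::Array(items) => current = items.get(index)?,
            Value::Number(_) => return None, // cannot index into a number
        }
    }
    Some(current)
}
```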

Inherently Parallel

By making data dependencies between processes the basis of the definition of a flow, the non-parallel aspects of a flow (when one process depends on data from a previous process) are explicit, leading to the ability to execute all processes that can execute (due to the availability of data for them to operate on) at any time, in parallel with other executions of other processes, or of other instances of the same process.

The level of concurrency in a flow program depends only on the data structures used and the connections between the processes that operate on them. Then the level of parallelism exploited in its execution depends on the resources available to the flow runner program running the flow.
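Here is a rough sketch of that last point, assuming a simple executor model in which a fixed number of worker threads pull independent jobs from a shared channel. The `Job` struct and the `execute` function are invented for this example (the job just multiplies its two inputs). The number of workers only changes how much work happens at once, not the results.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical job: an id plus the input values for a pure function.
struct Job {
    id: usize,
    inputs: (i64, i64),
}

/// Runs all jobs on `workers` threads. Jobs are independent, so they may run
/// in any order; results are sorted by job id so the returned Vec does not
/// depend on scheduling or on the number of workers.
fn execute(jobs: Vec<Job>, workers: usize) -> Vec<(usize, i64)> {
    let (job_tx, job_rx) = mpsc::channel::<Job>();
    let job_rx = Arc::new(Mutex::new(job_rx));
    let (result_tx, result_rx) = mpsc::channel::<(usize, i64)>();

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let job_rx = Arc::clone(&job_rx);
            let result_tx = result_tx.clone();
            thread::spawn(move || loop {
                // The lock guard is dropped at the end of this statement,
                // so the lock is held only while receiving one job.
                let next = job_rx.lock().unwrap().recv();
                let job = match next {
                    Ok(job) => job,
                    Err(_) => break, // channel closed: no more jobs
                };
                let (a, b) = job.inputs;
                result_tx.send((job.id, a * b)).unwrap();
            })
        })
        .collect();

    for job in jobs {
        job_tx.send(job).unwrap();
    }
    drop(job_tx); // close the job channel so idle workers exit
    drop(result_tx); // only the workers' clones remain

    for handle in handles {
        handle.join().unwrap();
    }
    let mut results: Vec<(usize, i64)> = result_rx.iter().collect();
    results.sort();
    results
}
```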

Distributable

As the functions are pure, and only depend on their inputs, they may be executed across threads, cores, processes, machines and (via portability and WASM) even a heterogeneous network of machines of different CPU architectures and operating systems.

Separate the program from the context

There is an explicit separation between the flow program itself, and the environment in which it runs.

Flows contain only pure functions, but they are run by a "flow runner" program (such as flowrcli) that provides "impure" context functions for interacting with the context in which it runs, for things like STDIO, File System, etc.

Efficiency

When there is no data to process, no processes are running and the flow and the flow runner program running it are idle.

Project Components and Structure

Here is a summary of the project components, their purpose and a link to their README.md:

- flowcore - A set of core structs and traits used by flowr and flowc, plus code to fetch content from file/http and resolve library (lib://) references.

- flowmacro - A macro used to help write function implementation code that compiles natively and to wasm

- flowc - The flowc flow compiler binary is a CLI built around flowrclib that takes a number of command line arguments and source files or URLs and compiles the flow or library referenced. flowrclib is the library for compiling flow program and library definitions from toml files, producing generated output projects that can be run by flowrcli or flowrgui.

- flowrlib - The flow runner library that loads and executes compiled flows.

- flowr - The flowr flow runner binary that can be used to run and debug flows compiled with a flow compiler such as flowc.

- flowrex - flowrex is a minimal flow job executor, intended for use across the network associated with flowrcli or flowrgui (above).

- flowstdlib - the flow "standard library" which contains a set of functions that can be used by flows being defined by the user

- examples - A set of example flows that can be run

The Inspirations for 'flow'

I have had many sources of inspiration in this area over the past three decades.

Without realizing it they started to coalesce in my head and seemingly unrelated ideas from very different areas started to come together to form what I eventually called 'flow' and I started to work on it.

The impetus to actually implement something, instead of just thinking about it, came when I was looking for some "serious", more complex, project in order to learn rust (and later adding WebAssembly to the mix).

It should be noted that this project was undertaken in a very "personal" (i.e. idiosyncratic) way, without any formal background in the area of functional programming, data flow programming, communicating sequential processes or similar. When starting it, I wanted to see if any of my intuitions and ideas could work, ignoring previous efforts, established knowledge and previous projects. I didn't want to get "sucked in" to just re-implementing someone else's ideas.

I have done quite a bit of reading of papers in these areas after getting a reasonable version of flow working, and saw I was repeating a number of existing ideas and techniques... no surprise!

Specific inspirations from my career

I have worked with the technologies listed below over the decades (from University until now) and they all added something to the idea for flow in my head.

- The Inmos transputer chip and its Occam parallel programming language (which I studied at University in the '80s), without realizing that this was based on Hoare's CSP.
  - Parallel programming language (although not based on data dependencies)
  - Parallel hardware (and software processes) that communicated by sending messages over connections (some virtual in software, others over hardware between chips)

- Structured Analysis and Design from my work with it in HP in the '80s!
  - Hierarchical functional decomposition
  - Encapsulation
  - Separation of Program from Context

- UNIX pipes
  - Separate processes, each responsible for limited functionality, communicating in a pipeline via messages (text)

- Trace scheduling for compiler instruction scheduling based on data dependencies between instructions (operations), work done at MultiFlow and later HP by Josh Fisher, Paolo Faraboschi and others.
  - Exploiting inherent parallelism by identifying data dependencies between operations

- Amoeba distributed OS by Andrew Tanenbaum that made a collaborating network of computers appear as one to the user of a "Workstation"
  - Distribution of tasks not requiring "IO", abstraction of what a machine is and how a computer program can run

- Yahoo! Pipes system for building "Web Mashups"
  - Visual assembly of a complex program from simpler processes by connecting them together with data flows

Non-Inspirations

There are a number of things that you might suspect were part of my set of inspirations for creating 'flow', or maybe you think I even copied the idea from them, but that in fact (you'll have to trust me on this one) is not true.

I didn't study Computer Science, and if I had I may well have been exposed to some of these subjects a long-time ago. That would probably have saved me a lot of time.

But, then I would have been implementing someone else's ideas and not (what I thought were) my own. Think of all the satisfaction I would have lost out on while re-inventing thirty to forty year-old ideas!

While implementing the first steps of 'flow' I started to see some materials come up in my Internet searches that looked like they could be part of a theory of the things I was struggling with. The main one would be Hoare's 1976 paper on the "Theory of Communicating Sequential Processes" (or CSP for short).

It turns out some of that work was the basis for some of my inspirations (e.g. the Inmos Transputer and the Occam language), unbeknownst to me.

But I decided to deliberately ignore them as I worked out my first thoughts, did the initial implementation and got some simple examples up and running!

Later, I looped back and read some papers, and confirmed most of my conjectures.

I got a bit bored with the algebra approach to it (and related papers) though and didn't read or learn too much.

One Hoare paper refers more to a practical implementation, and does hit on a number of the very subjects I was struggling with, such as the buffering (or not) of data on "data flows" between functions (or processes in his terms).

Once I progress some more, I will probably go back and read more of these papers and books and find solutions to the problems I have struggled to work out on my own - but part of the purpose of this project for me is the intellectual challenge to work them out for myself, as best as I can.

Parallelism

Using flow, algorithms can be defined that exploit multiple types of parallelism:

- Data Parallelism

- Pipelining

- Divide and Conquer

Data Parallelism

Also known as "Single Program Multiple Data".

In this case the data is such that it can be segmented and worked on in parallel, using the same basic algorithm for each chunk of data.

An example would be some image processing or image generation task, such as generating the Mandelbrot set (see the mandlebrot example in flowr/examples).

The two-dimensional space is broken up into a 2D Array of pixels or points, and then they are streamed through a function or sub-flow that does some processing or calculation, producing a new 2D Array of output values.

Due to the data-independence between them, all of them can be calculated/processed in parallel, across many threads or even machines, exploiting the inherent parallelism of this "embarrassingly parallel" algorithm.

They need to be combined in some way to produce the meaningful output. This could be using an additional sub-flow to combine them (e.g. produce an average intensity or color of an image), that is not parallel, or it could be to render them as an image for the user.
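A minimal sketch of the data-parallel idea, assuming a plain escape-time Mandelbrot calculation (this is not the code of the example flow): each point is computed independently, so the grid can be split into chunks of rows and handed to separate threads, and the result is the same however many threads are used. The function names and the [-2, 1] x [-1.5, 1.5] region are choices made for this sketch. It uses `std::thread::scope`, which needs rust 1.63 or later.

```rust
use std::thread;

/// Number of iterations (up to `max_iter`) before the point escapes the
/// radius-2 circle under z -> z^2 + c. Depends only on its own inputs.
fn escape_time(cx: f64, cy: f64, max_iter: u32) -> u32 {
    let (mut x, mut y) = (0.0f64, 0.0f64);
    for i in 0..max_iter {
        if x * x + y * y > 4.0 {
            return i;
        }
        let new_x = x * x - y * y + cx;
        y = 2.0 * x * y + cy;
        x = new_x;
    }
    max_iter
}

/// Computes a `width` x `height` grid (row-major) over [-2, 1] x [-1.5, 1.5],
/// splitting the rows between `threads` scoped threads.
/// `width`, `height` and `threads` must all be greater than zero.
fn mandelbrot(width: usize, height: usize, threads: usize, max_iter: u32) -> Vec<u32> {
    let mut pixels = vec![0u32; width * height];
    let rows_per_chunk = (height + threads - 1) / threads;
    thread::scope(|s| {
        for (chunk_index, chunk) in pixels.chunks_mut(rows_per_chunk * width).enumerate() {
            s.spawn(move || {
                for (i, pixel) in chunk.iter_mut().enumerate() {
                    // Recover this pixel's (x, y) position in the full grid.
                    let index = chunk_index * rows_per_chunk * width + i;
                    let (px, py) = (index % width, index / width);
                    let cx = -2.0 + 3.0 * px as f64 / width as f64;
                    let cy = -1.5 + 3.0 * py as f64 / height as f64;
                    *pixel = escape_time(cx, cy, max_iter);
                }
            });
        }
    });
    pixels
}
```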

In the case of producing a file or image for the user, functions can be used for that from the flow runner's context functions, leaving the flow itself totally parallel.

In a normal procedural language, an image would be rendered in memory in a 2D block of pixels and then written out to file sequentially so that the pixels are placed in the correct order/location in the file.

In a flow program, that could be done, although accumulating the 2D array in memory may represent a bottleneck.

flowrcli's image buffer context function is written such that it can accept pixels in any order and render them correctly, by having the following inputs:

### Inputs

* `pixel` - the (x, y) coordinate of the pixel

* `value` - the (r, g, b) triplet to write to the pixel

* `size` - the (width, height) of the image buffer

* `filename` - the file name to persist the buffer to
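This sketch shows why accepting pixels in any order works: each write is placed by its coordinates, so arrival order does not matter. `ImageBuffer` is a hypothetical stand-in, not flowrcli's implementation; it takes the `size`, `pixel` and `value` inputs listed above, leaves out the `filename` input and, as an assumption made for the example, renders to a plain-text PPM string instead of writing a file.

```rust
/// Hypothetical in-memory image buffer, created from a (width, height) size.
struct ImageBuffer {
    width: usize,
    height: usize,
    data: Vec<(u8, u8, u8)>,
}

impl ImageBuffer {
    fn new(size: (usize, usize)) -> Self {
        let (width, height) = size;
        ImageBuffer { width, height, data: vec![(0, 0, 0); width * height] }
    }

    /// Stores `value` at `pixel`. The position comes from the coordinates,
    /// not from the order in which pixels arrive.
    fn set(&mut self, pixel: (usize, usize), value: (u8, u8, u8)) {
        let (x, y) = pixel;
        assert!(x < self.width && y < self.height, "pixel out of bounds");
        self.data[y * self.width + x] = value;
    }

    /// Serializes the buffer as a plain-text PPM (P3) image, row by row.
    fn to_ppm(&self) -> String {
        let mut out = format!("P3\n{} {}\n255\n", self.width, self.height);
        for (r, g, b) in &self.data {
            out.push_str(&format!("{} {} {}\n", r, g, b));
        }
        out
    }
}
```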

Map Reduce

Map-Reduce is done similarly to the above, using a more complex initial step to form independent data "chunks" ("Mapping") that can be processed totally in parallel, and a combining phase ("Reducing") to produce the final output.
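Here is a small sketch of the same shape in rust, as an illustration rather than how a flow would be written: the map phase sums the squares of each chunk on its own scoped thread, and the reduce phase adds up the partial results. The function name is made up for the example, and `chunk_size` must be greater than zero.

```rust
use std::thread;

/// Map: each chunk is processed independently on its own scoped thread.
/// Reduce: the per-chunk partial results are combined into one value.
fn map_reduce_sum_of_squares(data: &[u64], chunk_size: usize) -> u64 {
    let partials: Vec<u64> = thread::scope(|s| {
        let handles: Vec<_> = data
            .chunks(chunk_size)
            .map(|chunk| s.spawn(move || chunk.iter().map(|v| v * v).sum::<u64>()))
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });
    partials.iter().sum()
}
```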

Pipelining

A flow program to implement pipeline processing of data is trivial and there is a pipeline example in flowr/examples.

A series of processes (they can be functions or sub-flows) are defined. Input data is connected to flow to the first, whose output is sent to the second, and so on, and the output is rendered for the user.

When multiple data values are sent in short succession (additional values are sent before the first value has propagated out of the output) then multiple processes can run in parallel, each one operating on a different data value, as there is no data or processing dependency between the data values.

If there are enough values (per unit time) to demand it, multiple instances of the same processes can be used to increase parallelism, doing the same operation multiple times in parallel on different data values.
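The following sketch models a pipeline with threads and channels rather than flow processes: two stages (add one, then double) each run on their own thread and are connected by `std::sync::mpsc` channels, so different values can be in different stages at the same time. The stage operations and the `pipeline` function are hypothetical.

```rust
use std::sync::mpsc;
use std::thread;

/// Two processing stages on their own threads, connected by channels, plus a
/// final step that collects the outputs. Values leave in the order they entered.
fn pipeline(inputs: Vec<i64>) -> Vec<i64> {
    let (tx1, rx1) = mpsc::channel::<i64>();
    let (tx2, rx2) = mpsc::channel::<i64>();
    let (tx3, rx3) = mpsc::channel::<i64>();

    // Stage 1: add one, then pass the value on.
    let s1 = thread::spawn(move || {
        for v in rx1 {
            tx2.send(v + 1).unwrap();
        }
    });
    // Stage 2: double, then pass the value on.
    let s2 = thread::spawn(move || {
        for v in rx2 {
            tx3.send(v * 2).unwrap();
        }
    });

    for v in inputs {
        tx1.send(v).unwrap();
    }
    drop(tx1); // closing the input lets each stage finish in turn

    // Final step: collect the outputs (the "render for the user" step).
    let outputs: Vec<i64> = rx3.iter().collect();
    s1.join().unwrap();
    s2.join().unwrap();
    outputs
}
```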

Divide and Conquer

Just as in procedural programming, where a large problem can be broken down into separate pieces that are programmed separately, the same can be done with flow.

A complex problem could be broken down into two (say) largely independent sub-problems. Each one can be programmed in different sub-flows, and fed different parts (or copies) of the input data. Then when both produce output they can be combined in some way for the user.

As there is no data dependency between the sub-flow outputs (intermediate values in the grander scheme of things) they can run totally in parallel.

If the two values just need to be output to the user, then they can each proceed at their own pace (in parallel) and each one be output when complete. In this case the order of the values in the output to the user might vary, and appropriate labelling to understand them will be needed.

Depending on how the values need to be combined, or if a strict order in the output is required, then a later ordering or combining step may be needed. This step will necessarily depend on both sub-flows' output values, thus introducing a data dependency, and this final step will operate without parallelism.

Providing the final (non-parallel step) is less compute intensive than the earlier steps, an overall gain can be made by dividing and conquering (and then combining).
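One familiar instance of this pattern, sketched in rust as an illustration (it is not one of the flow examples): sort the two halves of the input in parallel, since neither depends on the other, then merge them in a final step that needs both results. `parallel_sort` and `merge` are hypothetical names.

```rust
use std::thread;

/// Final combining step: merges two sorted slices into one sorted Vec.
/// It depends on both sub-results, so it cannot start until both are done.
fn merge(a: &[i32], b: &[i32]) -> Vec<i32> {
    let mut out = Vec::with_capacity(a.len() + b.len());
    let (mut i, mut j) = (0, 0);
    while i < a.len() && j < b.len() {
        if a[i] <= b[j] {
            out.push(a[i]);
            i += 1;
        } else {
            out.push(b[j]);
            j += 1;
        }
    }
    out.extend_from_slice(&a[i..]);
    out.extend_from_slice(&b[j..]);
    out
}

/// Divide: split the input in two. Conquer: sort each half on its own
/// scoped thread (no data dependency between them). Combine: merge.
fn parallel_sort(data: &[i32]) -> Vec<i32> {
    let (left, right) = data.split_at(data.len() / 2);
    let (sorted_left, sorted_right) = thread::scope(|s| {
        let l = s.spawn(|| {
            let mut v = left.to_vec();
            v.sort();
            v
        });
        let r = s.spawn(|| {
            let mut v = right.to_vec();
            v.sort();
            v
        });
        (l.join().unwrap(), r.join().unwrap())
    });
    merge(&sorted_left, &sorted_right)
}
```

The merge is linear in the input size, while each half costs O(n log n) to sort, which is the kind of cheap final step that makes dividing and conquering pay off.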

Status

The semantics of flows, processes and connections, along with the implementation of the flowc compiler, flowrcli runner, context functions and the flowstdlib library, have allowed for the creation of a set of example flows that execute as expected.

There is pretty good overall test coverage (> 84%) that allows for safer refactoring.

The book is reasonably extensive but can always be improved. It probably needs "real users" (not the author) to try to use it and flow to make the next round of improvements. There are issues in the repo and the project related to improving the book.

I have added an experimental GUI for running flows in flowrgui that uses the rust Iced GUI toolkit.

First flow

Without knowing anything about flow and its detailed semantics you might be able to guess what this flow below does when executed and what the output to STDOUT will be.

It is a fibonacci series generator.

Understanding the flow

NOTE: You can find a complete description of flow semantics in the next section, Defining Flows.

Root flow

All flows start with a root "flow definition". Other sub-flows can be nested under the root, via references to separate flow description files, to enable encapsulation and flow reuse.

In this case it is the only one, and no hierarchy of flow descriptions is used or needed.

You can see the TOML root flow definition for this flow in the flowrcli crate's fibonacci example.

root.toml

Interaction with the execution environment

The root defines what the interaction with the surrounding execution environment is, such as Stdout, or any other context function provided by the flow runtime being used (e.g. flowrcli).

The only interaction with the execution environment in this example is the use of stdout to print the numbers in the series to the Terminal.

Functions

Functions are stateless, and pure, and just take a set of inputs (one on each of its inputs) and produce an output.

When all the inputs of a function have a value, then the function can run and produce an output, or not produce outputs, as in the case of the impure stdout function.

This flow uses two functions (shown as orange ovals):

- the stdout function from the context functions as described above. stdout only has one, unnamed, default input and no outputs. It will print the value on STDOUT of the process running the flow runner (flowrcli) that is executing the flow.

- the add function from the flow standard library flowstdlib to add two integers together. add has two inputs "i1" and "i2" and produces the sum of them on the only, unnamed, "default" output.

Connections

Connections (the solid lines) take the output of a function when it has run, and send it to the input of connected functions. They can optionally have a name.

When a function has run, the input values used are made available again at the output.

In this case the following three connections exist:

- "i2" input value is connected back to the "i1" input.

- the output of "add" (the sum of "i1" and "i2") is connected back to the "i2" input. This connection has optionally been called "sum"

- the output of "add" (the sum of "i1" and "i2") is connected to the default input of "Stdout". This connection has optionally been called "sum"

Initializations

Inputs of processes (flows or functions) can be initialized with a value "Once" (at startup) or "Always" (each time it runs) using input initializers (dotted lines).

In this example two input initializers are used to set up the series calculation:

- "Once" initializer with value "1" in the "i2" input of "add"

- "Once" initializer with value "0" in the "i1" input of "add"
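A hypothetical model of the two kinds of initializer may help; `Initializer` and `Input` are invented for this sketch and are not flow's types. Both kinds fill the input at startup, and after the value has been taken for a job only an `Always` initializer refills it. In this example both initializers are `Once`, so after the first job the inputs of "add" are fed only by connections.

```rust
/// Hypothetical input initializer: `Once` provides a value only at startup,
/// `Always` refills the input each time its value is taken for a job.
#[derive(Clone, Copy)]
enum Initializer {
    Once(i64),
    Always(i64),
}

/// A function input that holds at most one value at a time.
struct Input {
    value: Option<i64>,
    initializer: Option<Initializer>,
}

impl Input {
    fn new(initializer: Option<Initializer>) -> Self {
        // Both kinds of initializer fill the input at startup.
        let value = match initializer {
            Some(Initializer::Once(v)) | Some(Initializer::Always(v)) => Some(v),
            None => None,
        };
        Input { value, initializer }
    }

    /// Takes the value for a job; an `Always` initializer immediately refills it.
    fn take(&mut self) -> Option<i64> {
        let taken = self.value.take();
        if let Some(Initializer::Always(v)) = self.initializer {
            self.value = Some(v);
        }
        taken
    }
}
```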

Running the flow

This flow exists as an example in the flowr/examples/fibonacci folder. See the root.toml root flow definition file.

You can run this flow and observe its output from the terminal, while in the flow project root folder:

> cargo run -p flowc -- -C flowr/src/bin/flowrcli flowr/examples/fibonacci

flowc will compile the flow definition from the root flow definition file (root.toml) using the context functions offered by flowrcli (defined in the flowr/src/bin/flowrcli/context folder) to generate a manifest.json compiled flow manifest in the flowr/examples/fibonacci folder.

flowc then runs flowrcli to execute the flow.

flowrcli is a Command Line flow runner and provides implementations for context functions to read and write to stdio (e.g. stdout).

The flow will produce a fibonacci series printed to Stdout on the terminal.

> cargo run -p flowc -- -C flowr/src/bin/flowrcli flowr/examples/fibonacci

Compiling flowstdlib v0.6.0 (/Users/andrew/workspace/flow/flowstdlib)

Finished dev [unoptimized + debuginfo] target(s) in 1.75s

Running `target/debug/flowc flowr/examples/first`

1

2

3

5

8

...... lines deleted ......

2880067194370816120

4660046610375530309

7540113804746346429

Step-by-Step

Here we walk you through the execution of the previous "my first flow" (the fibonacci series example).

Compiled flows consist of only functions, so flow execution consists of executing functions, or more precisely, jobs formed from a set of inputs, and a reference to the function implementation.

Init

The flow manifest (which contains a list of Functions and their output connections) is loaded.

Any function input that has an input initializer on it is initialized with the value provided in the initializer.

Any function that either has no inputs (only context functions are allowed to have no inputs, such as Stdin) or has a value on all of its inputs, is set to the ready state.

Execution Loop

The next function that is in the ready state (has all its input values available, and is not blocked from sending its output by other functions) has a job created from its input values and the job is dispatched to be run.

Executors wait for jobs to run, run them and then return the result, that may or may not contain an output value.

Any output value is sent to all functions connected to the output of the function that the job ran for. Sending an input value to a function may make that function ready to run.

The above is repeated until there are no more functions in the ready state, then execution has terminated and the flow ends.
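The loop can be sketched, very much simplified, for this fibonacci flow. This is a hypothetical model, not flowrlib's code: inputs are plain `Option<i64>` fields, the ready list is a `VecDeque`, jobs run one at a time instead of being dispatched to executors, and using `i64::checked_add` is an assumption about how "add" detects overflow and produces no output.

```rust
use std::collections::VecDeque;

/// Hypothetical, greatly simplified runtime state for the fibonacci flow:
/// the two inputs of "add" and the single input of "stdout".
struct State {
    add_i1: Option<i64>,
    add_i2: Option<i64>,
    stdout_in: Option<i64>,
}

#[derive(Debug, Clone, Copy, PartialEq)]
enum Func {
    Add,
    Stdout,
}

/// Runs the ready-list loop until no function is ready and returns the
/// values that "stdout" would have printed.
fn run_fibonacci() -> Vec<i64> {
    // Init: apply the two "Once" initializers; "add" has all inputs, so it is ready.
    let mut state = State { add_i1: Some(0), add_i2: Some(1), stdout_in: None };
    let mut ready: VecDeque<Func> = VecDeque::from([Func::Add]);
    let mut printed = Vec::new();

    // Execution loop: take the next ready function, form a job from its
    // inputs, run it, and send any output along its connections.
    while let Some(func) = ready.pop_front() {
        match func {
            Func::Add => {
                let i1 = state.add_i1.take().unwrap();
                let i2 = state.add_i2.take().unwrap();
                // The job's input value "i2" is sent back to input "i1".
                state.add_i1 = Some(i2);
                // On overflow there is no output, so "i2" stays empty and
                // "add" is never made ready again.
                if let Some(sum) = i1.checked_add(i2) {
                    state.add_i2 = Some(sum); // connection "sum" to "i2"
                    state.stdout_in = Some(sum); // connection "sum" to "stdout"
                    ready.push_back(Func::Stdout);
                    ready.push_back(Func::Add);
                }
            }
            Func::Stdout => printed.push(state.stdout_in.take().unwrap()),
        }
    }
    printed
}
```

Under these assumptions the sketch collects 91 values, from 1 up to 7540113804746346429, and then stops because the ready list is empty.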

Specific Sequence for this example

Below is a description of what happens in the flowr runtime to execute the flow.

You can see log output (printed to STDOUT and mixed with the number series output) of what is happening using the -v/--verbosity <VERBOSITY_LEVEL> command line option to flowrcli.

- Values accepted (from less to more output verbosity) are: error (the default), warn, info, debug and trace.

Init:

- The "i2" input of the "add" function is initialized with the value 1

- The "i1" input of the "add" function is initialized with the value 0

- The "add" function has a value on all of its inputs, so it is set to the ready state

- STDOUT does not have an input value available so it is not "ready"

Loop Starts

Ready = ["add"]

- "add" runs with Inputs = (0, 1) and produces output 1

- value 1 from output of "add" is sent to input "i2" of "add"

- "add" only has a value on one input, so is NOT ready

- value 1 from output of "add" is sent to default (only) input of "Stdout"

- "Stdout" has a value on all of its (one) inputs and so is marked "ready"

- input value "i2" (1) of the executed job is sent to input "i1" of "add"

- "add" now has a value on both its inputs and is marked "ready"


Ready = ["Stdout", "add"]

- "Stdout" runs with Inputs = (1) and produces no output

- "Stdout" converts the number value to a String and prints "1" on the STDOUT of the terminal

- "Stdout" no longer has values on its inputs and is set to not ready

Ready = ["add"]

- "add" runs with Inputs = (1, 1) and produces output 2

- value 2 from output of "add" is sent to input "i2" of "add"

- "add" only has a value on one input, so is NOT ready

- value 2 from output of "add" is sent to default (only) input of "Stdout"

- "Stdout" has a value on all of its (one) inputs and so is marked "ready"

- input value "i2" (1) of the executed job is sent to input "i1" of "add"

- "add" now has a value on both its inputs and is marked "ready"


Ready = ["Stdout", "add"]

- "Stdout" runs with Inputs = (2) and produces no output

- "Stdout" converts the number value to a String and prints "2" on the STDOUT of the terminal

- "Stdout" no longer has values on its inputs and is set to not ready

Ready = ["add"]

The above sequence proceeds, until eventually:

- The add function detects a numeric overflow in the add operation and outputs no value.

- No value is fed back to the "i2" input of add

- "add" only has a value on one input, so is NOT ready

- No value is sent to the input of "Stdout"

- "Stdout" no longer has values on its inputs and is set to not ready

Ready = []

No function is ready to run, so flow execution ends.

Resulting in a fibonacci series being output to Stdout

1

2

3

5

8

...... lines deleted ......

2880067194370816120

4660046610375530309

7540113804746346429

Debugging your first flow

Command line options to flowc

When running flowc using cargo run -p flowc you should add -- to mark the end of the options passed to cargo, and the start of the options passed to flowc.

You can see what they are by using --help, which produces output similar to this:

cargo run -p flowc -- --help

Finished dev [unoptimized + debuginfo] target(s) in 0.12s

Running 'target/debug/flowc --help'

flowc 0.8.8

USAGE:

flowc [FLAGS] [OPTIONS] [--] [ARGS]

FLAGS:

-d, --dump Dump the flow to .dump files after loading it

-z, --graphs Create .dot files for graph generation

-h, --help Prints help information

-p, --provided Provided function implementations should NOT be compiled from source

-s, --skip Skip execution of flow

-g, --symbols Generate debug symbols (like process names and full routes)

-V, --version Prints version information

OPTIONS:

-L, --libdir <LIB_DIR|BASE_URL>... Add a directory or base Url to the Library Search path

-o, --output <OUTPUT_DIR> Specify a non-default directory for generated output. Default is $HOME/.flow/lib/{lib_name} for a library.

-i, --stdin <STDIN_FILENAME> Read STDIN from the named file

-v, --verbosity <VERBOSITY_LEVEL> Set verbosity level for output (trace, debug, info, warn, error (default))

ARGS:

<FLOW> the name of the 'flow' definition file or library to compile

<flow_args>... Arguments that will get passed onto the flow if it is executed

Command line options to flowrcli

By default flowc uses flowrcli to run the flow once it has compiled it. It also defaults to passing the -n/--native flag to flowrcli, so that flows are executed using the native implementations of library functions.

To pass command line options on to flowrcli, separate them from the options to flowc with another -- separator.

flowrcli accepts the same -v/--verbosity verbosity options as flowc.

Getting debug output

If you want to follow what the run-time is doing in more detail, you can increase the verbosity level (the default level is ERROR) using the -v/--verbosity option.

So, to walk through each and every step of the flow's execution, similar to the previous step-by-step section, use -v debug and pipe the output to more (as there is a lot of output!):

cargo run -p flowc -- flowr/examples/fibonacci -- -v debug | more

which should produce output similar to this:

INFO - 'flowr' version 0.8.8

INFO - 'flowrlib' version 0.8.8

DEBUG - Loading library 'context' from 'native'

INFO - Library 'context' loaded.

DEBUG - Loading library 'flowstdlib' from 'native'

INFO - Library 'flowstdlib' loaded.

INFO - Starting 4 executor threads

DEBUG - Loading flow manifest from 'file:///Users/andrew/workspace/flow/flowr/examples/fibonacci/manifest.json'

DEBUG - Loading libraries used by the flow

DEBUG - Resolving implementations

DEBUG - Setup 'FLOW_ARGS' with values = '["my-first-flow"]'

INFO - Maximum jobs dispatched in parallel limited to 8

DEBUG - Resetting stats and initializing all functions

DEBUG - Init: Initializing Function #0 '' in Flow #0

DEBUG - Input initialized with 'Number(0)'

DEBUG - Input initialized with 'Number(1)'

DEBUG - Init: Initializing Function #1 '' in Flow #0

DEBUG - Init: Creating any initial block entries that are needed

DEBUG - Init: Readying initial functions: inputs full and not blocked on output

DEBUG - Function #0 not blocked on output, so added to 'Ready' list

DEBUG - =========================== Starting flow execution =============================

DEBUG - Job #0:-------Creating for Function #0 '' ---------------------------

DEBUG - Job #0: Inputs: [[Number(0)], [Number(1)]]

DEBUG - Job #0: Sent for execution

DEBUG - Job #0: Outputs '{"i1":0,"i2":1,"sum":1}'

DEBUG - Function #0 sending '1' via output route '/sum' to Self:1

DEBUG - Function #0 sending '1' via output route '/sum' to Function #1:0

DEBUG - Function #1 not blocked on output, so added to 'Ready' list

DEBUG - Function #0 sending '1' via output route '/i2' to Self:0

DEBUG - Function #0, inputs full, but blocked on output. Added to blocked list

DEBUG - Job #1:-------Creating for Function #1 '' ---------------------------

DEBUG - Job #1: Inputs: [[Number(1)]]

DEBUG - Function #0 removed from 'blocked' list

DEBUG - Function #0 has inputs ready, so added to 'ready' list

DEBUG - Job #1: Sent for execution

DEBUG - Job #2:-------Creating for Function #0 '' ---------------------------

DEBUG - Job #2: Inputs: [[Number(1)], [Number(1)]]

1

DEBUG - Job #2: Sent for execution

DEBUG - Job #2: Outputs '{"i1":1,"i2":1,"sum":2}'

DEBUG - Function #0 sending '2' via output route '/sum' to Self:1

DEBUG - Function #0 sending '2' via output route '/sum' to Function #1:0

DEBUG - Function #1 not blocked on output, so added to 'Ready' list

DEBUG - Function #0 sending '1' via output route '/i2' to Self:0

DEBUG - Function #0, inputs full, but blocked on output. Added to blocked list

Defining Flows

We describe the syntax of definition files, and also the run-time semantics of flows, functions, jobs, inputs, etc., in order to understand how a flow will run once defined.

A flow is a static hierarchical grouping of functions that produce and consume data, connected via connections into a graph.

Root Flow

All flows have a root flow definition file.

The root flow can reference functions provided by the "flow runner" application that will execute the flow, for the purpose of interacting with the surrounding environment (such as file IO, standard IO, etc.).

These are the context functions.

Like any sub-flow, the root flow may include references to sub-flows and functions, joined by connections between their inputs and outputs, and so on down the hierarchy.

The root flow cannot have any input or output. As such, all data flows start and end within the root flow. What you might consider "outputs", such as printing to standard output, is done by describing a connection to a context function that interacts with the environment.

Flows in General

Any flow can contain references to functions it uses, plus zero or more references to nested flows via Process References, and so on down.

Data flows internally between sub-flows and functions (collectively known as "processes"), as defined by the connections.

All computation is done by functions. A flow is just a hierarchical organization method that allows functions (and sub-flows) to be grouped and abstracted into higher-level concepts. All data that flows originates in a function and terminates in a function.

flow and sub-flow nesting is just an organizational technique to facilitate encapsulation and re-use of functionality, and does not affect program semantics.

Whether a certain process in a flow is implemented by one more complex function - or by a sub-flow combining multiple, simpler, functions - should not affect the program semantics.

Valid Elements of a flow definition

Valid entries in a flow definition include:

- flow - A String naming this flow (obligatory)

- docs - An optional name of an associated markdown file that documents the flow

- version - A SemVer compatible version number for this flow (Optional)

- authors - Array of Strings of names and emails of authors of the flow (Optional)

- input|output - 0 or more inputs/outputs of this flow made available to any parent including it (Note: the root flow may not contain any inputs or outputs). See IOs for more details.

- process - 0 or more references to sub-processes to include under the current flow. A sub-process can be another flow or a function. See Process References for more details.

- connection - 0 or more connections between io of sub-processes and/or io of this flow. See Connections for more details.

Complete Feature List

The complete list of features that can be used in the description of flows is:

- Flow definitions

  - Named inputs and outputs (except the root flow, which has no parent)

  - References to sub-processes to use them in the flow via connections

    - Functions

      - Provided functions

      - Library functions

      - Context functions

    - Sub-flows

      - Arbitrarily from the file system or the web

      - From a library

  - Initializers for sub-process inputs and the flow outputs

    - Once initializers that initialize the input/output with a value just once at the start of flow execution

    - Always initializers that initialize the input/output every time it is emptied by the creation of a job that takes the value

  - Use of aliases to refer to sub-processes with different names inside a flow, facilitating the use of the same function or flow multiple times for different purposes within the sub-flow

  - Connections between outputs and inputs within a flow

    - Connections can be formed between inputs to the flow or outputs of one process (function or flow) and outputs of the flow or inputs of a process

    - Multiple connections from a source

    - Multiple connections to a destination

    - Connection to/from a default input/output by just referencing the process in the connection

    - Destructuring of an output struct in a connection to just connect a sub-part of it

    - Optional naming of a connection to facilitate debugging

- Function definitions

  - With just inputs

  - With just outputs

  - With inputs and outputs

  - default single input/output, named single input/output, named multiple inputs/outputs

  - author and versioning meta-data and references to the implementation

- Libraries of processes (functions and flows) can be built and described, and referenced in flows

Name

A string used to identify an element.

IO

IOs produce or consume data of a specific type, and are where data enters/leaves a flow or function (more generally referred to as "processes").

- name - used to identify an input or output in connections to/from it

- type (optional) - An optional Data type for this IO

Default inputs and outputs

If a function only has one input or one output, then naming that input/output is optional. If not named, it is referred to as the default input. Connections may connect data to/from this input/output just by referencing the function.

Generic Inputs or Outputs

If an input or output has no specific Data type specified, then it is considered generic and can take inputs of any type. What the function does, or what outputs it produces, may vary depending on the input type at runtime, and should be specified by the implementor of the function and understood by the flow programmer using it.

Example: A print function could accept any type and print out some human readable representation of all of them.

Example: An add function could be overloaded and if provided two numbers it would sum them, but if provided two strings it could concatenate them.
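The two examples above can be sketched as a function over a hypothetical `Value` type that mirrors a couple of the JSON types. Neither `Value` nor `generic_add` is part of flowstdlib. The sketch only shows how a generic input lets the behaviour depend on the type found at runtime, returning `None` (no output) for combinations the function does not handle.

```rust
/// Hypothetical runtime value arriving on a generic input (a subset of JSON types).
#[derive(Debug, Clone, PartialEq)]
enum Value {
    Number(i64),
    String(String),
}

/// An "overloaded" add: two numbers are summed and two strings are concatenated.
/// Any other combination (or a numeric overflow) produces no output.
fn generic_add(i1: &Value, i2: &Value) -> Option<Value> {
    match (i1, i2) {
        (Value::Number(a), Value::Number(b)) => a.checked_add(*b).map(Value::Number),
        (Value::String(a), Value::String(b)) => Some(Value::String(format!("{a}{b}"))),
        _ => None,
    }
}

fn main() {
    println!("{:?}", generic_add(&Value::Number(2), &Value::Number(3)));
    println!("{:?}", generic_add(&Value::String("foo".into()), &Value::String("bar".into())));
}
```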

Process Reference

Flows may reference another flow or a function (generically referred to as a process) which is defined in a separate definition file. These are "process references".

Process Reference Fields

source - A Url (or relative path) of a file/resource where the process is defined.

For example, here we reference a process called stdout (see context functions):

[[process]]

source = "context://stdio/stdout"

This effectively brings the function into scope with the name stdout, and it can then be used in connections as a source or destination of data.

Alias for a Process Reference

alias - an alias to use to refer to a process in this flow.

  - This can be different from the name defined by the process itself

  - This can be used to create two or more instances of a process in a flow, with the ability to refer to them separately and distinguish them in connections.

For example, here the process called add is aliased as sum and then can be referred to using sum in connections.

[[process]]

alias = "sum"

source = "lib://flowstdlib/math/add"

Source Url formats

The following formats for the source Url are available:

- No "scheme" in the URI --> file: is assumed. If the path starts with / then an absolute path is used. If the path does not start with / then the path is assumed to be relative to the location of the file referring to it.

- file: scheme --> look for the process definition file on the local file system

- http: or https: scheme --> look for the process definition file on the web

- lib: --> look for the process in a Library that is loaded by the runtime. See flow libraries for more details on how this Url is used to find the process definition file provided by the library.

- context: --> a reference to a function in the context, provided by the runner application. See context functions for more details on how the process definition file is used.
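The scheme rules above amount to a simple dispatch on the `source` string. The sketch below is illustrative only. `SourceKind` and `classify_source` are hypothetical names, and the sketch assumes schemes are written with `://` as in the examples in this document, so a bare `file:` prefix without `//` would not be recognized.

```rust
/// Where a process definition would be looked for, based on the `source` Url.
#[derive(Debug, PartialEq)]
enum SourceKind {
    RelativeFile, // no scheme, path relative to the referring file
    AbsoluteFile, // no scheme, path starting with '/'
    File,         // file: scheme, local file system
    Web,          // http: or https: scheme
    Library,      // lib: scheme, a library loaded by the runtime
    Context,      // context: scheme, provided by the runner application
    Unsupported,  // any other scheme
}

fn classify_source(source: &str) -> SourceKind {
    match source.split_once("://") {
        None if source.starts_with('/') => SourceKind::AbsoluteFile,
        None => SourceKind::RelativeFile,
        Some(("file", _)) => SourceKind::File,
        Some(("http", _)) | Some(("https", _)) => SourceKind::Web,
        Some(("lib", _)) => SourceKind::Library,
        Some(("context", _)) => SourceKind::Context,
        Some(_) => SourceKind::Unsupported,
    }
}

fn main() {
    for source in ["my_function", "lib://flowstdlib/math/add", "context://stdio/stdout"] {
        println!("{source} -> {:?}", classify_source(source));
    }
}
```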

File source

This is the case when no scheme or the file:// scheme is used in the source Url.

The process definition file is in the same file system as the file referencing it.

- in the flow's directories, using relative file paths

  - e.g. source = "my_function"

  - e.g. source = "my_flow"

  - e.g. source = "subdir/my_other_function"

  - e.g. source = "subdir/my_other_process"

- in a different flow's directories, using relative file paths

  - e.g. source = "../other_flow/other_function"

  - e.g. source = "../other_flow/other_flow"

- elsewhere in the local file system, using absolute paths

  - e.g. source = "/root/other_directory/other_function"

  - e.g. source = "/root/other_directory/other_flow"
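Resolving a scheme-less source against the file that references it can be sketched with `std::path`. This is a minimal sketch assuming Unix-style paths. `resolve_file_source` is a hypothetical helper. It does not normalize `..`, check that the file exists, or add any file extension, and how flowc actually locates the definition file is not covered here.

```rust
use std::path::{Path, PathBuf};

/// Resolves a scheme-less `source` against the definition file that references it.
/// Relative paths are joined to that file's directory. For an absolute path,
/// `Path::join` discards the base, so the path is used unchanged.
fn resolve_file_source(referring_file: &Path, source: &str) -> PathBuf {
    let base = referring_file.parent().unwrap_or_else(|| Path::new(""));
    base.join(source)
}

fn main() {
    // Hypothetical location of the referring flow definition file
    let root = Path::new("/home/me/my_flow/root.toml");
    for source in ["my_function", "../other_flow/other_function", "/root/other_directory/other_flow"] {
        println!("{}", resolve_file_source(root, source).display());
    }
}
```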

Web Source

When the http or https Url scheme is used for source, the process definition file is loaded via an HTTP request to the specified location.

- e.g. source = "http://my_flow_server.com/folder/function"

- e.g. source = "https://my_secure_flow_server.com/folder/flow"

Initializing an input in a reference

Inputs of a referenced process may be initialized, in one of two ways:

- once - the value is inserted into the input just once on startup, and thereafter the input will remain empty unless a value is sent to it from a process.

- always - the value will be inserted into the input each time after the process runs.

Example, initializing the add function's i1 and i2 inputs to 0 and 1 respectively, just once at the start of the flow's execution.

[[process]]

source = "lib://flowstdlib/math/add"

input.i1 = { once = 0 }

input.i2 = { once = 1 }

Example, initializing the add function's i1 input to 1 every time it runs. The other input is free to be used in connections, and this effectively makes this an "increment" function that adds one to any value sent to it on the i2 input.

[[process]]

source = "lib://flowstdlib/math/add"

input.i1 = { always = 1 }
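The difference between once and always initializers can be modeled with a queue per input. This is a minimal sketch under assumptions. `Input`, `Initializer` and the integer values are hypothetical, and the sketch follows the description above: an always initializer refills the input whenever taking a value for a job empties it. A real runtime would only take values once all of a function's inputs are ready.

```rust
use std::collections::VecDeque;

/// Hypothetical initializer kinds, mirroring `{ once = ... }` and `{ always = ... }`.
#[derive(Debug, Clone, Copy)]
enum Initializer {
    Once(i64),
    Always(i64),
}

/// Hypothetical model of one input: a queue of values plus an optional initializer.
struct Input {
    values: VecDeque<i64>,
    initializer: Option<Initializer>,
}

impl Input {
    fn new(initializer: Option<Initializer>) -> Self {
        let mut values = VecDeque::new();
        // Both kinds of initializer place a value in the input at startup
        if let Some(Initializer::Once(v) | Initializer::Always(v)) = initializer {
            values.push_back(v);
        }
        Input { values, initializer }
    }

    /// A value arriving via a connection from another process.
    fn send(&mut self, value: i64) {
        self.values.push_back(value);
    }

    /// Takes a value for a new job. If that empties the input, an `Always`
    /// initializer refills it; a `Once` initializer never does.
    fn take(&mut self) -> Option<i64> {
        let value = self.values.pop_front();
        if self.values.is_empty() {
            if let Some(Initializer::Always(v)) = self.initializer {
                self.values.push_back(v);
            }
        }
        value
    }
}

fn main() {
    // The "increment" configuration: i1 is always 1, i2 receives values from connections
    let mut i1 = Input::new(Some(Initializer::Always(1)));
    let mut i2 = Input::new(None);
    for n in vec![41, 99] {
        i2.send(n);
        if let (Some(a), Some(b)) = (i1.take(), i2.take()) {
            println!("{}", a + b);
        }
    }
}
```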

Initializing the default input

When a process only has one input, and it is not named, then you can refer to it by the name default for the purposes of specifying an initializer.

Example, initializing the sole input of the stdout context function with the string "Hello World!" just once at the start of flow execution:

[[process]]

source = "context://stdio/stdout"

input.default = {once = "Hello World!"}

Function Definitions

A function is defined in a definition file that should be alongside the function's implementation files (see later).

Function Definition Fields

- function - Declares that this file defines a function, and gives the name of the function. This is required to link the definition with the implementation, allowing the flow compiler to find the implementation of the function and include it in the generated project. The name must exactly match the name of the object implemented.

- source - the file name of the file implementing the function, relative to the location of the definition file

- docs - a markdown file documenting the function, relative to the location of the definition file

- input - zero (for impure)|one (for pure) or more inputs (as per IO)

- output - zero (for impure)|one (for pure) or more outputs (as per IO)

- impure - optional field to define an impure function

Types of Function Definitions

Functions may reside in one of three locations:

- A context function provided by a flow running application, as part of a set of functions it provides to flows to allow them to interact with the environment, user, etc. E.g. readline to read a line of text from STDIN.

- A library function provided by a flow library, that a flow can reference and then use to help define the overall flow functionality. E.g. add from the flowstdlib library to add two numbers together.

- A provided function where the function's definition and implementation are provided within the flow hierarchy. As such, they cannot be easily re-used by other flows.

Impure (or context) functions

An impure function is a function that has just a source of data (e.g. stdin, which interacts with the execution environment to get the data and then outputs it) or just a sink of data (e.g. stdout, which takes an input and passes it to the execution environment and produces no output in the flow).

The output of an impure function is not deterministic based just on the inputs provided to it but depends on the system or the user using it. It may have side-effects on the system, such as outputting a string or modifying a file.

In flow these are referred to as context functions because they interact with (and are provided by) the execution context where the flow is run. For more details see context functions.

Impure functions should only be defined as part of a set of context functions, not as a function in a library nor as a provided function within a flow.

Impure functions should declare themselves impure in their definition file using the optional impure field.

Example, the stdin context function declares itself impure

function = "stdin"

source = "stdin.rs"

docs = "stdin.md"

impure = true

...

Pure functions

Functions that are used within a flow (whether provided by the flow itself or from a library) must be pure (not depend on any input other than the provided input values, nor have any side-effects in the system) and have at least one input and one output.

- If a function had no input, there would be no way to send data to it and it would be useless

- If it had no output, then it would not be able to send data to other functions and would also be useless

Thus, such a pure function can be run anytime, anywhere, and given the same input it will produce the same output.
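The rule above can be expressed as a simple check over a summary of a definition file. `FunctionDefinition` and `check` are illustrative names, not flowc's API. The sketch only encodes what is stated here: a pure function needs at least one input and one output, while an impure (context) function may be just a source or just a sink.

```rust
/// Hypothetical summary of what a function definition file declares.
struct FunctionDefinition {
    name: &'static str,
    impure: bool,
    inputs: usize,
    outputs: usize,
}

/// Rejects pure functions that lack an input or an output; impure functions pass.
fn check(def: &FunctionDefinition) -> Result<(), String> {
    if !def.impure && (def.inputs == 0 || def.outputs == 0) {
        return Err(format!(
            "pure function '{}' must have at least one input and one output",
            def.name
        ));
    }
    Ok(())
}

fn main() {
    let stdout = FunctionDefinition { name: "stdout", impure: true, inputs: 1, outputs: 0 };
    let add = FunctionDefinition { name: "add", impure: false, inputs: 2, outputs: 1 };
    for def in [&stdout, &add] {
        println!("{}: {:?}", def.name, check(def));
    }
}
```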

Function execution

Functions are made available to run when a value is available on every one of their inputs. Then a job is created containing one set of input values (a value taken from each of its inputs) and sent for execution. Execution may produce an output value, which, using the connections defined, will be passed on to the connected input of one or more other functions in the function graph. That in turn may cause that other function to run, and so on and so forth, until no function can be found available to run.
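Job creation can be sketched as one FIFO buffer per input: the function is ready only when none of its buffers is empty, and a job takes the oldest value from each. This is a hypothetical model (the `Function` struct and `i64` values are assumptions). It leaves out the "blocked on output" handling seen in the debug log.

```rust
use std::collections::VecDeque;

/// Hypothetical model of a function: one FIFO buffer of values per input.
struct Function {
    name: String,
    inputs: Vec<VecDeque<i64>>,
}

impl Function {
    /// A function can run only when every one of its inputs holds a value.
    fn is_ready(&self) -> bool {
        self.inputs.iter().all(|input| !input.is_empty())
    }

    /// Creates a job holding one set of input values, taking the oldest value
    /// from each input. Returns None if the function is not ready.
    fn create_job(&mut self) -> Option<Vec<i64>> {
        if !self.is_ready() {
            return None;
        }
        Some(
            self.inputs
                .iter_mut()
                .map(|input| input.pop_front().expect("checked by is_ready"))
                .collect(),
        )
    }
}

fn main() {
    let mut add = Function {
        name: "add".to_string(),
        inputs: vec![VecDeque::from(vec![0, 5]), VecDeque::from(vec![1])],
    };
    while let Some(job) = add.create_job() {
        println!("job for '{}' with inputs {:?}", add.name, job);
    }
}
```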

Default inputs and outputs

If a function only has one input or one output, then naming that input/output is optional. If not named, it is referred to as the default input. Connections may connect data to/from this input/output just by referencing the function.

Types

By default flow supports JSON types:

- null

- boolean

- object

- array

- number

- string

Connection

Connections connect a source of data (via an IO Reference) to a sink of data (via an IO Reference) of a compatible type within a flow.

- name (Optional) - an optional name for the connection. This can be used to help in debugging flows

- from = IO Reference to the data source that this connection comes from

- to = IO Reference to a data sink that this connection goes to

Connections at multiple level in flow hierarchy

A flow is a hierarchy from the root flow down, including functions and sub-flows (collectively sub-processes).

Connections are defined within each flow or sub-flow from a source to a destination.

Within a flow sources include:

- an input of this flow

- an output from one of the sub-processes

and destinations include:

- an input of one of the sub-processes

- an output of this flow

A connection may be defined with multiple destinations, and/or there may be multiple connections from one source or to one destination.

Connection "branching"

Within a sub-flow there may exist a connection to one of its outputs, as a destination. At the next level up in the flow hierarchy, that sub-flow output becomes a possible source for connections defined at that level.

Thus a single connection originating at a single source in the sub-flow may "branch" into multiple connections, reaching multiple destinations.

Connection feedback

It is possible to make a connection from a process's output back to one of its inputs. This is useful for looping, recursion, accumulation, etc., as described later in programming methods.

Connection from input values

The input values used in an execution are made available at the output, alongside the calculated output value, when the function completes execution. Thus a connection can be formed from this input value, and the value is sent via connections when the function completes, just like the output value. It is also possible to feed this input value back to the same or a different input for use in recursion. An example of this can be seen in the fibonacci example flow definition.

# Loop back the input value #2 from this calculation, to be the input to input #1 on the next iteration

[[connection]]

from = "add/i2"

to = "add/i1"

Connection Gathering and Collapsing

When a flow is compiled, sources of data (function outputs) are followed through the layers of sub-flow/super-flow definitions in the flow hierarchy, and the resulting "tree" of connections is eventually connected (possibly branching to become multiple connections) to the destination(s).

The chain of connections involved in connecting a source to each of the destinations is "collapsed" as part of the compilation process, to leave a single connection from the source to each of the destinations.

Connection Optimizing

Through flow re-use, some connections may end up not reaching any destination. The compiler optimizes these connections away by dropping them.

If, in the process of dropping dead connections, a function ends up not having any output and/or input (for pure functions), it may be removed, and an error or warning reported by the compiler.
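Gathering and collapsing can be sketched as a graph walk. Starting from a function output, the walk follows connections through intermediate flow inputs/outputs until it reaches function inputs, and those become the end-to-end destinations. An empty result corresponds to a dead connection that would be dropped. This is an illustration only. The route strings and the `collapse` helper are hypothetical, not flowc's internal representation.

```rust
use std::collections::{HashMap, HashSet};

/// Follows connections from `source` through intermediate flow IOs until function
/// inputs are reached, returning the collapsed destinations (sorted). An empty
/// result means the chain never reaches a destination and could be dropped.
fn collapse<'a>(
    source: &'a str,
    connections: &HashMap<&'a str, Vec<&'a str>>,
    function_inputs: &HashSet<&'a str>,
) -> Vec<&'a str> {
    let mut destinations = Vec::new();
    let mut visited = HashSet::new();
    let mut to_visit = vec![source];
    while let Some(route) = to_visit.pop() {
        if !visited.insert(route) {
            continue; // this route has already been followed
        }
        for &next in connections.get(route).into_iter().flatten() {
            if function_inputs.contains(next) {
                destinations.push(next); // end of a chain: a function input
            } else {
                to_visit.push(next); // an intermediate flow IO: keep following
            }
        }
    }
    destinations.sort();
    destinations
}

fn main() {
    let mut connections = HashMap::new();
    connections.insert("/root/sub/series", vec!["/root/sub/output"]);
    connections.insert("/root/sub/output", vec!["/root/add/i1", "/root/print"]);
    let function_inputs: HashSet<&str> = ["/root/add/i1", "/root/print"].iter().copied().collect();
    println!("{:?}", collapse("/root/sub/series", &connections, &function_inputs));
}
```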

IO References

An IO Reference uniquely identifies an Input/Data-source (flow/function) or an Output/Data-sink in the flow hierarchy.

If any flows or functions defined in other files are referenced with an alias, then it should be used in the IO references to inputs or outputs of that referenced flow/function.

Thus valid IO reference formats to use in connections are:

Data sources

- input/{input_name} (where input is a keyword and thus a sub-flow cannot be named input or output)

- {sub_process_name}/{output_name} or {sub_process} for the default output

Where sub_process_name is a process referenced in this flow, and may be a function or a sub-flow.

The reference uses the process's name (if the process was not given an alias when referenced) or its alias.

Data sinks

- output/{output_name} (where output is a keyword and thus a sub-flow cannot be named input or output)

- {sub_process_name}/{input_name} or {sub_process} for the default input
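The reference formats above can be told apart by their first path segment. This is a hypothetical parser for illustration. `IoRef` and `parse_io_ref` are not flowc names. The sketch does not check whether a reference is used on the source or sink side of a connection. Anything after the first `/` is kept as the IO route, so sub-structure selections such as `output/key` stay intact.

```rust
/// Hypothetical parsed form of an IO reference used in a connection.
#[derive(Debug, PartialEq)]
enum IoRef<'a> {
    /// `input/{name}`: an input of the flow containing the connection
    FlowInput(&'a str),
    /// `output/{name}`: an output of the flow containing the connection
    FlowOutput(&'a str),
    /// `{process}` (default input/output) or `{process}/{io route}`
    Process { name: &'a str, io: Option<&'a str> },
}

fn parse_io_ref(reference: &str) -> IoRef<'_> {
    match reference.split_once('/') {
        Some(("input", name)) => IoRef::FlowInput(name),
        Some(("output", name)) => IoRef::FlowOutput(name),
        Some((name, io)) => IoRef::Process { name, io: Some(io) },
        None => IoRef::Process { name: reference, io: None },
    }
}

fn main() {
    for reference in ["input/i1", "add/i2", "stdout", "function/output/key"] {
        println!("{reference} -> {:?}", parse_io_ref(reference));
    }
}
```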

Selecting parts of a connection's value

A connection can select to "connect" only part of the data values passed on the source of the connection. See below Selecting sub-structures of an output for more details.

Run-time Semantics

An input IO can be connected to multiple outputs, via multiple connections.

An output IO can be connected to multiple inputs on other flows or functions via multiple connections.

When data is produced on the output by a function, the data is copied to each destination function using all the connections that exist from that output.

Data can be buffered at each input of a function.

The order of data arrival at a function's input is the order of creation of jobs executed by that function. However, that does not guarantee the order of completion of those jobs.

A function cannot run until data is available on all inputs.

Loops are permitted from an output to an input, and are used as a feature to achieve certain behaviours.

When a function runs it produces a result that can contain an output. The result also contains all the inputs used to produce any output. Thus input values can be reused by connecting from this "output input-value" in connections to other processes, or looped back to an input of the same function.

Example, the fibonacci example uses this to define recursion.

...

# Loop back the input value #2 from this calculation, to be the input to input #1 on the next iteration

[[connection]]

from = "add/i2"

to = "add/i1"

...
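The copying of output values and input values to every connected destination can be sketched using the Job #2 result from the debug log earlier (`{"i1":1,"i2":1,"sum":2}`, sent via `/sum` to Self:1 and Function #1:0, and via `/i2` to Self:0). The types and the `deliver` helper are hypothetical. Function #0 is add, and function #1 is the function that prints the value.

```rust
use std::collections::HashMap;

/// (function id, input index), as in "Function #1:0" or "Self:1" in the debug log.
type Destination = (usize, usize);

/// Copies each routed value of a job's result to every destination connected to
/// that route. The result holds the input values used ("i1", "i2") as well as the
/// output ("sum"), so input values can be routed onward just like outputs.
fn deliver(
    result: &HashMap<&str, i64>,
    connections: &HashMap<&str, Vec<Destination>>,
    inputs: &mut HashMap<Destination, Vec<i64>>,
) {
    for (route, destinations) in connections {
        if let Some(value) = result.get(route) {
            for destination in destinations {
                // each destination gets its own copy of the value
                inputs.entry(*destination).or_default().push(*value);
            }
        }
    }
}

fn main() {
    let result: HashMap<&str, i64> = [("i1", 1), ("i2", 1), ("sum", 2)].iter().copied().collect();
    let mut connections: HashMap<&str, Vec<Destination>> = HashMap::new();
    connections.insert("sum", vec![(0, 1), (1, 0)]); // /sum -> Self:1 and Function #1:0
    connections.insert("i2", vec![(0, 0)]); // /i2 -> Self:0
    let mut inputs = HashMap::new();
    deliver(&result, &connections, &mut inputs);
    let mut delivered: Vec<_> = inputs.into_iter().collect();
    delivered.sort();
    println!("{:?}", delivered);
}
```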

Type Match

For a connection to be valid and used in execution of a flow, the data source must be found, the data sink must be found and the two must be of compatible DataTypes.

If those conditions are not met, then the connection will be dropped (with an error message output) and the compiler will attempt to build and execute the flow without it.

By not specifying the data type on intermediary connections through the flow hierarchy, the flow author can enable connections that are not constrained by the intermediate inputs/outputs used, and those types do not need to be known when the flow is being authored. In this case the type check will pass on the intermediate connections to those "generic" inputs or outputs.

However, once the connection chain is collapsed down to one end-to-end connection, the source and destination types must also pass the type check. This includes intermediate connections that may select part of the value.

Example

- Subflow 1 has a connection: A function series with default output Array/Number --> Generic output of the subflow

  - The destination of the connection is generic and so the intermediate type check passes

- Root flow (which contains Subflow 1) has a connection: Generic output of the subflow --> Function add input i1 (which has a data type Number) that includes selection of an element of the array of numbers /1

  - The source is generic, so the intermediate type check passes

- A connection chain is built from the series output through the intermediate connection to the add function input i1

- The connection chain is collapsed to a connection from the Array element of index 1 of the series function's output to the add function's input i1

- The from and to types of this collapsed connection are both Number and so the type check passes
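The end-to-end check in this example can be sketched as follows. The sketch works out the type selected by the sub-route (`/1` into `Array/Number` gives `Number`), then compares it with the destination type, letting Generic pass. This is a simplified, hypothetical model. `DataType`, `selected_type` and `collapsed_check` are illustrative names, and the runtime conversions of compatible types are deliberately left out, so it is stricter than the real compiler.

```rust
/// Hypothetical subset of flow's data types.
#[derive(Debug, Clone, PartialEq)]
enum DataType {
    Generic,
    Number,
    String,
    Array(Box<DataType>),
}

/// The type selected by a sub-route of array indexes, e.g. ["1"] for "/1".
/// Selecting into a Generic value stays Generic; indexing a non-array fails.
fn selected_type(t: &DataType, subroute: &[&str]) -> Option<DataType> {
    match subroute.split_first() {
        None => Some(t.clone()),
        Some((index, rest)) => match t {
            DataType::Generic => Some(DataType::Generic),
            DataType::Array(element) if index.parse::<usize>().is_ok() => {
                selected_type(element, rest)
            }
            _ => None,
        },
    }
}

/// Check on the collapsed end-to-end connection: the selected source type must
/// equal the destination type, unless either side is Generic.
fn collapsed_check(source: &DataType, subroute: &[&str], destination: &DataType) -> bool {
    match selected_type(source, subroute) {
        Some(DataType::Generic) => true,
        Some(t) => *destination == DataType::Generic || t == *destination,
        None => false,
    }
}

fn main() {
    // series output: Array/Number; add input i1: Number; selection "/1"
    let series_output = DataType::Array(Box::new(DataType::Number));
    println!("{}", collapsed_check(&series_output, &["1"], &DataType::Number));
}
```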

Runtime type conversion of Compatible Types

The flow runtime library implements some type conversions during flow execution, permitting non-identical types from an output and input to be connected by the compiler, knowing the runtime will handle it.

These are known as compatible types. At the moment the following conversions are implemented, but more may be added over time:

Matching Types

- Type 'T' --> Type 'T'. No conversion required.

Generics

- Generic type --> any input. This assumes the input will check the type and handle appropriately.

- Array/Generic type --> any input. This assumes the input will check the type and handle appropriately.

- any output --> Generic type. This assumes the input will check the type and handle appropriately.

- any output --> Array/Generic type. This assumes the input will check the type and handle appropriately.

Array Deserialization

- Array/'T' --> 'T'. The runtime will "deserialize" the array and send its elements one-by-one to the input. NOTE that 'T' may be any type, including an Array, which is just a special case.

- Array/Array/'T' --> 'T'. The runtime will "deserialize" the array of arrays and send elements one-by-one to the input

Array Wrapping

- 'T' --> Array/'T'. The runtime will take the value and wrap it in an array and send that one-element array to the input. Again, 'T' can be any type, including an Array.

- 'T' --> Array/Array/'T'. The runtime will take the value and wrap it in an array in an array and send that one-element array of arrays to the input.
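The conversions listed above depend only on how deeply the output and input types are nested in arrays. The sketch below models that, under stated assumptions. The `DataType`/`Value` enums and `convert` are hypothetical and are not flowrlib's types. Base types must match exactly unless either side is Generic, in which case the value is passed on unchanged for the input to check. Extra array levels on the output are deserialized into their elements, and missing levels are added by wrapping.

```rust
/// Hypothetical subset of flow data types and values.
#[derive(Debug, Clone, PartialEq)]
enum DataType {
    Generic,
    Number,
    String,
    Array(Box<DataType>),
}

#[derive(Debug, Clone, PartialEq)]
enum Value {
    Number(i64),
    String(String),
    Array(Vec<Value>),
}

/// Splits a type into its array nesting depth and base type,
/// e.g. Array/Array/Number -> (2, Number).
fn split(t: &DataType) -> (usize, &DataType) {
    match t {
        DataType::Array(inner) => {
            let (depth, base) = split(inner);
            (depth + 1, base)
        }
        base => (0, base),
    }
}

/// Replaces every array in `values` by its elements, keeping their order.
fn flatten_once(values: Vec<Value>) -> Vec<Value> {
    values
        .into_iter()
        .flat_map(|v| match v {
            Value::Array(items) => items,
            other => vec![other],
        })
        .collect()
}

/// Values delivered to an input of type `to` for one value produced on an
/// output of type `from`, or None if the types are not compatible.
fn convert(value: Value, from: &DataType, to: &DataType) -> Option<Vec<Value>> {
    let (from_depth, from_base) = split(from);
    let (to_depth, to_base) = split(to);
    let mut values = vec![value];
    // Generic on either side: passed on unchanged, the input is assumed to check it
    if *from_base == DataType::Generic || *to_base == DataType::Generic {
        return Some(values);
    }
    if from_base != to_base {
        return None;
    }
    if from_depth > to_depth {
        // Array deserialization: send the elements one by one
        for _ in 0..from_depth - to_depth {
            values = flatten_once(values);
        }
    } else {
        // Array wrapping: wrap the value in as many one-element arrays as needed
        for _ in 0..to_depth - from_depth {
            values = values.into_iter().map(|v| Value::Array(vec![v])).collect();
        }
    }
    Some(values)
}

fn main() {
    let array_of_strings = DataType::Array(Box::new(DataType::String));
    let value = Value::String("hello".to_string());
    println!("{:?}", convert(value, &DataType::String, &array_of_strings));
}
```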

Default input or output

If a function only has one input or one output, then naming that input/output is optional. If not named, it is referred to as the default input (or output). Connections may connect data to/from this input just by referencing the function.

Example, the stdout context function only has one input and it is not named:

function = "stdout"

source = "stdout.rs"

docs = "stdout.md"

impure = true

[[input]]

and a connection to it can be defined thus:

[[connection]]

from = "add"

to = "stdout"

Named inputs

If an input is defined with a name, then connections to it should include the function name and the input name to define which input is being used.

Example

[[connection]]

from = "add"

to = "add/i2"

Selecting an output

When a function runs it produces a set of outputs, producing data on zero or more of its outputs, all at once.

A connection can be formed from an output to another input by specifying the output's route as part of the IO Reference in the from field of the connection.

Example:

[[connection]]

from = "function_name/output_name"

to = "stdout"

Selecting sub-structures of an output

As described in types, flow supports JSON data types. This includes two "container types", namely: "object" (a Map) and "array".

If an output produces an object, a connection can be formed from an entry of the map (not the entire map) to a destination input. This allows (say) connecting a function that produces a Map of strings to another function that accepts a string. This is done by extending the route used in the IO Reference of the connection with the output name (to select the output) and the key of the map entry (to select just that map entry).

Example: function called "function" has an output named "output" that produces a Map of strings. One of those Map entries has the key "key". Then the string value associated with that key is used in the connection.

[[connection]]

from = "function/output/key"

to = "stdout"

Similarly, if the output is an array of values, a single element from the array can be specified in the connection using a numeric subscript.

Example: function called "function" has an output named "output" that produces an array of strings. Then a single string from the array can be sent to a destination input thus:

[[connection]]

from = "function/output/1"

to = "stdout"

If a function runs and produces an output which does not contain the sub-structure selected by a connection, for the purpose of the destination of that connection it is just as if the output was not produced, or the function had not run. Thus, no value will arrive at the destination function and it will not run.
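Selecting a map entry or array element at runtime, and sending nothing when it is absent, can be sketched over a hypothetical JSON-like `Value` type. `select` is an illustrative helper, not part of flowrlib. It treats each sub-route segment as a map key for objects and as a numeric index for arrays, and returns `None` (no value sent) when the sub-structure is missing.

```rust
use std::collections::BTreeMap;

/// Hypothetical JSON-like value (a subset of the JSON types flow supports).
#[derive(Debug, Clone, PartialEq)]
enum Value {
    String(String),
    Array(Vec<Value>),
    Object(BTreeMap<String, Value>),
}

/// Follows a sub-route such as ["key"] or ["1"] into a value. Returns None when
/// the selected sub-structure is absent, so no value is sent on that connection.
fn select<'v>(value: &'v Value, subroute: &[&str]) -> Option<&'v Value> {
    let mut current = value;
    for part in subroute {
        current = match current {
            Value::Object(map) => map.get(*part)?,
            Value::Array(items) => items.get(part.parse::<usize>().ok()?)?,
            _ => return None,
        };
    }
    Some(current)
}

fn main() {
    let array = Value::Array(vec![Value::String("a".into()), Value::String("b".into())]);
    // like: from = "function/output/1"
    println!("{:?}", select(&array, &["1"]));
}
```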

Connecting to multiple destinations

A single output can be connected to multiple destinations by creating multiple connections referencing the output.

But, to make it easier (less typing) to connect an output to multiple destinations, the [[connection]] format permits specifying more than one destination in the to field, as an array.

Example

[[connection]]

from = "output"

to = ["destination", "destination2"]

Flow Libraries

Libraries can provide functions (definition and implementation) and flows (definition) that can be re-used by other flows.

An example library is the flowstdlib library, but others can be created and shared by developers.

Library structure

A flow library's structure is up to the developer to determine, starting with a src subdirectory, with optional sub-directories for modules and sub-modules.

Native crate structure

To support native linking of the library, it must be a valid Rust crate. That requires a Cargo.toml file in the source that references a lib.rs file, which in turn references mod.rs files in sub-folders that reference the sources, so that everything is included in the crate when compiled.

Example

[lib]

name = "flowstdlib"

path = "src/lib.rs"

Parallel WASM crate structure - WASM library build speed-up

Each function (see below) contains its own Cargo.toml used to compile it to WASM. If left like this, each function would re-compile all of its source dependencies, even though many of the dependencies are shared across all the functions, making compiling the library to WASM very slow.

To speed up library builds, a solution ("hack") is used. A cargo workspace is defined in parallel with the Native crate mentioned above, with its root workspace Cargo.toml in the {lib_name}/src/ folder. This workspace includes as members references to all the Cargo.toml files of the functions (see below). Thus when any of them are compiled they share a single target directory, and the common dependencies are only compiled once.

Including a flow

Flow definition files may reside at any level. For example, the sequence flow definition is in the math module of the flowstdlib library.

Alongside the flow definition, a documentation Markdown file (with .md extension) can be included. It should be referenced in the flow definition file using the docs field (e.g. docs = "sequence.md").

Including a function

Each function should have a subdirectory named after the function ({function_name}), which should include:

- Cargo.toml - build file for Rust implementations

- {function_name}.toml - function definition file. It should include these fields:

  - type = "rust" - type is obligatory and "rust" is the only type currently implemented

  - function = "{function_name}" - obligatory

  - source = "{function_name}.rs" - obligatory, and the file must exist

  - docs = "{function_name}.md" - optional documentation file that, if referenced, must exist

- {function_name}.md - if referenced in the function definition file then it will be used (copied to output)

- {function_name}.rs - referenced from the function definition file. Must be valid Rust and implement the required traits

Compiling a library

Flow libraries are compiled using the flowc flow compiler, specifying the library root directory as the source url.

This will compile and copy all required files from the library source directory into a library directory. This directory is then a self-contained, portable library.

It can be packaged, moved, unpackaged and used elsewhere, provided it can be found by the compiler and runtime (using the default location $HOME/.flow/lib, the FLOW_LIB_PATH environment variable, or the -L, --libdir <LIB_DIR|BASE_URL> option).

The output directory will have the same structure as the library source (subdirs for modules) and will include:

- manifest.json - generated library manifest, in the root of the directory structure
- *.md - Markdown source files copied into the output directory corresponding to the source directory
- *.toml - flow and function definition files copied into the output directory corresponding to the source directory
- *.wasm - function WASM implementations compiled from the supplied function sources and copied into the output directory corresponding to the source directory
- *.dot - 'dot' (Graphviz) format graph descriptions of any flows in the library source
- *.dot.svg - flow graphs rendered into SVG files from the corresponding 'dot' files. These can be referenced in doc files

Lib References

References to flows or functions are described in more detail in the process references section. Here we will focus on specifying the source for a process (flow or function) from a library using the "lib://" Url format.

The process reference to refer to a library provided flow or function is of the form:

lib://lib_name/path_to_flow_or_function

Breaking that down:

- "lib://" Url scheme identifies this reference as a reference to a library provided flow or function

- "lib_name" (the hostname of the Url) is the name of the library

- "path_to_flow_or_function" (the path of the Url) is the location within the library where the flow or function resides.
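Breaking a reference into its parts can be sketched with plain string handling. This is an illustration only: parse_lib_reference is a hypothetical name, and flow itself may well use a full Url parser rather than this prefix split.

```rust
/// Hypothetical parser for a library process reference of the form
/// "lib://lib_name/path_to_flow_or_function". Returns (lib_name, path) or None.
fn parse_lib_reference(reference: &str) -> Option<(&str, &str)> {
    // The "lib://" scheme marks a library-provided flow or function
    let rest = reference.strip_prefix("lib://")?;
    // The "hostname" part is the library name; the remainder is the path within the library
    let (lib_name, path) = rest.split_once('/')?;
    if lib_name.is_empty() || path.is_empty() {
        return None;
    }
    Some((lib_name, path))
}

fn main() {
    if let Some((lib_name, path)) = parse_lib_reference("lib://flowstdlib/math/add") {
        println!("library = {}, path = {}", lib_name, path);
    }
}
```

A reference with another scheme (file://, http://, context://) is simply not treated as a library reference here.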

Not specifying a location (a file with file:// or a web resource with http:// or https://) allows the system to load the actual library, with its definitions and implementations, from different places in different flow installations. Thus flows that use library functions are portable, provided the library is present and can be found wherever the flow is being run.

The flowrlib runtime library by default looks for libraries in $HOME/.flow/lib, but can also accept a "search path" of additional locations in which to look (using the library's name "lib_name" from the Url).

Different flow runners (e.g. flowrcli, flowrgui or others) provide a command line option (-L) to add entries to the search path.

Default location

If the library you are referencing is in the default location ($HOME/.flow/lib) then there is no need to configure the library search path or provide additional entries to it at runtime.

Configuring the Library Search Path

The library search path is initialized from the contents of the $FLOW_LIB_PATH environment variable.

This path may be augmented by supplying additional directories or URLs to search using one or more instances of the -L command line option.
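A sketch of how such a search path could be assembled. Two points are assumptions rather than statements from the text above: that $FLOW_LIB_PATH holds a comma-separated list, and that -L entries are appended after the environment entries. The helper build_search_path is hypothetical, and the default location $HOME/.flow/lib is left out here because the runtime looks there anyway.

```rust
/// Hypothetical sketch: build a library search path from the value of $FLOW_LIB_PATH
/// (assumed comma-separated) followed by one entry per -L, --libdir option.
fn build_search_path(flow_lib_path: Option<&str>, cli_libdirs: &[&str]) -> Vec<String> {
    let mut search_path: Vec<String> = flow_lib_path
        .unwrap_or("")
        .split(',')
        .map(str::trim)
        .filter(|entry| !entry.is_empty())
        .map(String::from)
        .collect();
    // Each -L option adds one directory or URL to search
    search_path.extend(cli_libdirs.iter().map(|entry| entry.to_string()));
    search_path
}

fn main() {
    let env_value = std::env::var("FLOW_LIB_PATH").ok();
    // "/opt/flow/lib" stands in for a value given with -L
    println!("{:?}", build_search_path(env_value.as_deref(), &["/opt/flow/lib"]));
}
```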

Finding the referenced lib process

The algorithm used to find files via process references is described in more detail in the process references section. An example of how a library function is found is shown below.

A process reference exists in a flow with source = "lib://flowstdlib/math/add"

- Library name = flowstdlib
- Function path within the library = math/add

All the directories in the search path are searched for a top-level sub-directory that matches the library name.

If a directory matching the library name is found, the path to the process within the library is used to try and find the process definition file.

For example, if a flow references a process thus:

[[process]]
source = "lib://flowstdlib/math/add"

Then (using the default location, with $HOME being /Users/me) the directory /Users/me/.flow/lib/flowstdlib is searched for.

If that directory is found, then the process path within the library (math/add) is used to create the full path to the process definition file: /Users/me/.flow/lib/flowstdlib/math/add.

(refer to the full algorithm in process references)

If the file /Users/me/.flow/lib/flowstdlib/math/add.toml exists then it is parsed and made available to the flow for use in connections.
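The lookup just described can be sketched with std::path. This is a simplified illustration, not the full algorithm from process references: find_lib_process is a hypothetical name, and the second candidate ({function_name}/{function_name}.toml) is an assumption based on the function directory layout described earlier in this section. It also keeps searching later entries if a matching library directory does not contain the process.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical sketch: look through each search path entry for a sub-directory named
/// after the library, then for the process definition file within it.
fn find_lib_process(search_path: &[PathBuf], lib_name: &str, process_path: &str) -> Option<PathBuf> {
    for root in search_path {
        let lib_dir = root.join(lib_name);
        // Only entries containing a top-level sub-directory matching the library name count
        if !lib_dir.is_dir() {
            continue;
        }
        let base = lib_dir.join(process_path);
        // 1) e.g. .../flowstdlib/math/add.toml
        let mut candidates = vec![base.with_extension("toml")];
        // 2) assumption: a function in its own sub-directory, e.g. .../flowstdlib/math/add/add.toml
        if let Some(name) = base.file_name() {
            candidates.push(base.join(Path::new(name).with_extension("toml")));
        }
        if let Some(found) = candidates.into_iter().find(|candidate| candidate.is_file()) {
            return Some(found);
        }
    }
    None
}

fn main() {
    let home = std::env::var("HOME").unwrap_or_default();
    let search_path = vec![PathBuf::from(home).join(".flow").join("lib")];
    match find_lib_process(&search_path, "flowstdlib", "math/add") {
        Some(file) => println!("found {}", file.display()),
        None => println!("not found"),
    }
}
```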

Context Functions

Each flow runner application can provide a set of functions (referred to as context functions) to flows for interacting with the execution environment.

They are identified by a flow defining a process reference that uses the context:// Url scheme (see process references for more details).

In order to compile a flow the compiler must be able to find the definition of the function.

In order to execute a flow the flow runner must either have an embedded implementation of the function or know how to load one.

Different runtimes may provide different functions, and thus it is not guaranteed that a function is present at runtime.

Completion of Functions

Normal "pure" functions can be executed any number of times as their output depends only on the inputs and the (unchanging) implementation. They can be run any time a set of inputs is available.

However, a context function may have a natural limit to the number of times it can be run during the execution of a flow using it. An example would be a function that reads a line of text from a file. It can be run as many times as there are lines of text in the file, then it will return End-Of-File and a flag to indicate to the flow runtime that it has "completed" and should not be invoked again.

If this was not done, as the function has no inputs, it would always be available to run, and be executed indefinitely, just to return EOF each time.

For this reason, each time a function is run, it returns a "run me again" flag that the runtime uses to determine if it has "completed" or not. If it returns false, then the function is put into the "completed" state and it will never be run again (during that flow's execution).
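The "run me again" contract can be sketched as below. This is a simplified model, not the flowrlib API: ReadLine, run and run_to_completion are hypothetical names, and a real runtime schedules many functions rather than looping over one.

```rust
/// Hypothetical context-like function with no inputs: each run returns the next line
/// (if any) plus a "run me again" flag.
struct ReadLine {
    lines: std::vec::IntoIter<String>,
}

impl ReadLine {
    fn run(&mut self) -> (Option<String>, bool) {
        match self.lines.next() {
            Some(line) => (Some(line), true), // more input may follow: run me again
            None => (None, false),            // End-Of-File: do not run me again
        }
    }
}

/// Minimal runtime loop: keeps invoking the function until it reports it has completed.
fn run_to_completion(function: &mut ReadLine) -> Vec<String> {
    let mut outputs = Vec::new();
    loop {
        let (output, run_again) = function.run();
        if let Some(line) = output {
            outputs.push(line);
        }
        if !run_again {
            break; // "completed": never invoked again during this execution
        }
    }
    outputs
}

fn main() {
    let mut read_line = ReadLine { lines: vec!["first".to_string(), "second".to_string()].into_iter() };
    for line in run_to_completion(&mut read_line) {
        println!("{}", line);
    }
}
```

Without the flag, the input-less function would always be ready to run and the loop above would never end.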

Specifying the Context Root

At compile time the compiler must know which functions are available and their definitions.

Since it is the flow runner that provides the implementations and knows their definitions, it must make these discoverable and parseable by the compiler as a set of function definition files.

This is done by specifying to the flowc compiler what is called the context root: the root folder where the targeted runtime's context functions reside.

Context Function Process References

A reference to a context function process (in this case it is always a function) such as STDOUT is of the form:

[[process]]
source = "context://stdio/stdout"

The context:// Url scheme identifies it as a context function whose definition should be sought below the Context Root. The rest of the Url specifies the location under the Context Root directory (once found).

Example

The flow project directory structure is used in this example, with flow located at /Users/me/flow and flow in the user's $PATH.

The fibonacci example flow is thus found in the /Users/me/flow/flowr/examples/fibonacci directory.

The flowrcli flow runner directory is at /Users/me/flow/flowr/src/bin/flowrcli.

Within that folder flowrcli provides a set of context function definitions for a Command Line Interface (CLI) implementation in the context sub-directory.

From the root directory of the flow project, using relative paths, an example flow can be compiled and run using the -C, --context_root <CONTEXT_DIRECTORY> option to flowc:

> flowc -C flowr/src/bin/flowrcli flowr/examples/fibonacci

The flowc compiler sees the "context://stdio/stdout" reference. It has been told that the Context Root is at flowr/src/bin/flowrcli/context, so it searches for (and finds) a function definition file at flowr/src/bin/flowrcli/context/stdio/stdout/stdout.toml using the algorithm described in process references.
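The mapping from a context:// reference to a definition file in the example above can be sketched as follows. It only models the {function_name}/{function_name}.toml case shown here; context_definition_path is a hypothetical helper, and the complete search rules are those in process references.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical sketch: map a "context://" reference to the function definition file
/// below the Context Root, assuming the {function_name}/{function_name}.toml layout.
fn context_definition_path(context_root: &Path, reference: &str) -> Option<PathBuf> {
    // Only context:// references are resolved against the Context Root
    let path = reference.strip_prefix("context://")?;
    // The last path segment is taken as the function name, e.g. "stdout"
    let function_name = path.rsplit('/').next().filter(|name| !name.is_empty())?;
    Some(context_root.join(path).join(format!("{}.toml", function_name)))
}

fn main() {
    let root = Path::new("flowr/src/bin/flowrcli/context");
    if let Some(file) = context_definition_path(root, "context://stdio/stdout") {
        println!("{}", file.display());
    }
}
```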

Provided Functions

As described previously, flows can use functions provided by the flow runner app (e.g. flowrcli) and by flow libraries.

However, a flow can also provide its own functions (a definition, for the compiler, and an implementation, for the runtime).

The process references section describes the algorithm for finding the function's files (definition and implementation) using relative paths within a flow file hierarchy.

Using relative paths means that flows are "encapsulated" and portable (by location): they can be moved between directories, file systems and systems/nodes, and the relative locations of the provided functions allow them to still be found and the flow compiled and run.

Examples of Provided Functions

The flowr crate has two examples that provide functions as part of the flow:

- Reverse Echo in the folder flowr/examples/reverse-echo - a simple example that provides a function to reverse a string
- Mandelbrot in the folder flowr/examples/mandlebrot - provides two functions:
  - pixel_to_point to convert pixels to points in the 2D complex plane
  - escapes to calculate the value of a point in the Mandelbrot set

What a provided function has to provide

In order to provide a function as part of a flow the developer must provide:

Function definition file

Definition of the function in a TOML file.

Example escapes.toml

As with any other function definition, it must define:

- function - field to show this is a function definition file and provide the function's name
- source - the name of the implementation file (relative path to this file)
- type - to define what type of implementation is provided ("rust" is the only supported value at this time)
- input - the function's inputs, as described in IOs
- output - the function's outputs, as described in IOs
- docs - documentation Markdown file (relative path)

Example escapes.md

Implementation

Code that implements the function of the type specified by type in the file specified by source.

Example: escapes.rs

This may optionally include tests, that will be compiled and run natively.
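As an illustration of the kind of code such an implementation file contains, here is a stand-alone sketch of an escape-time calculation, with a native test module of the sort mentioned above. It is not the contents of the example's escapes.rs: the signature is an assumption, and it omits the traits a flow function must implement.

```rust
/// Hypothetical escape-time core: the number of iterations of z = z^2 + c (starting at
/// z = 0) before |z| exceeds 2, capped at `limit` for points that appear to stay bounded.
fn escapes(c_re: f64, c_im: f64, limit: u32) -> u32 {
    let (mut z_re, mut z_im) = (0.0_f64, 0.0_f64);
    for i in 0..limit {
        // |z| > 2 is checked as |z|^2 > 4 to avoid a square root
        if z_re * z_re + z_im * z_im > 4.0 {
            return i;
        }
        let new_re = z_re * z_re - z_im * z_im + c_re;
        z_im = 2.0 * z_re * z_im + c_im;
        z_re = new_re;
    }
    limit
}

fn main() {
    for (re, im) in [(0.0, 0.0), (2.0, 2.0), (-1.0, 0.0)] {
        println!("c = {} + {}i -> {}", re, im, escapes(re, im, 255));
    }
}

// Tests like this are compiled and run natively (e.g. with `cargo test`)
#[cfg(test)]
mod tests {
    use super::escapes;

    #[test]
    fn origin_never_escapes() {
        assert_eq!(escapes(0.0, 0.0, 255), 255);
    }
}
```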

Build file

In the case of the rust type (the only type implemented!), a Cargo.toml file that is used to compile the function's implementation to WASM as a stand-alone project.

How are provided function implementations loaded and ran

If the flow running app (using the flowrlib library) is statically linked, how can it load and then run the provided implementation?

This is done by compiling the provided implementation to WebAssembly, using the provided build file. The .wasm byte code file is generated when the flow is compiled, and then loaded by flowrlib when the flow is loaded.

Programming Methods

flow provides the following facilities to help programmers create flows:

Encapsulation

Functionality can be encapsulated within a function at the lowest level by implementing it in code, defining the function via a function definition file with its inputs and outputs, and describing the functionality provided by it in an associated Markdown file.